CI/CD Pipeline Security & Shifting Left

Recently I have been doing far more AppSec work in Agile, Lean environments. I also took the SANS SEC540 course, Cloud Security and DevSecOps Automation, which has lots of really great exercises, but I like to try to create some of my own examples. In this one I wanted to create a CI/CD (Continuous Integration/Delivery) pipeline that integrates Static Application Security Testing (SAST) and Software Composition Analysis (SCA), and finally some Postman testing of API endpoints that includes negative tests (more on this later).

To start with I needed some example code, so I found an example and adapted it to my own needs. The code, along with the other supporting files like the Jenkinsfile, can be found on my GitHub under Planetary-API. Please note, I don't claim to be the best coder in the world :-)

I chose an API partly for simplicity of the examples and partly because there are things I wanted to explore further later in terms of bearer authentication and the use of JWTs (for another project).

The source code is fully contained within the app.py file. The API endpoint routes are shown below.

IDE Plugins & Commit/Push Hooks

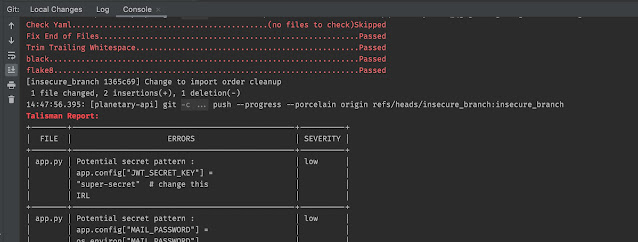

I added the Pre-commit framework for managing hooks into the git operations. It's as simple as pip install pre-commit. Once installed you just need to configure what actions you want to take on a commit to the repo.

The first hook runs Black, the Python formatter, which fixes most of what the second hook would otherwise flag. The second hook, Flake8, enforces style and prevents a commit if it finds any questionable formatting. Readable code is securable code.

I also use Snyk in the IDE, where it scans the requirements.txt file for the project's Python dependencies. Snyk has recently added support for Python Static Application Security Testing (SAST) as well. Although Snyk is a commercial tool, it has a free tier that can be used by anyone.

[Figure: Snyk IDE Integration]

Integrating security tools into the IDE either using plugins or by hooking into Git SCM brings these tools closer to the developer as they code.

Pre-Push Hook

Talisman checks for secrets and sensitive information so that they do not leave the developer's workstation and end up in the repository. I have it installed as a pre-push hook so that it does its checks after the commit but before anything is pushed to GitHub.

Black and Flake8 checks can also be seen passing as the commit happens. Talisman is called just before the code is pushed to GitHub, and in this case it prevents the push because of its findings.
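To give a feel for what a secrets scanner looks for, here is a minimal sketch of the idea in Python. It is not Talisman's implementation: the patterns, the entropy threshold and the function names are all my own assumptions, chosen only to illustrate the two usual techniques (known-pattern matching and high-entropy string detection).

```python
import math
import re

# Hypothetical patterns for illustration only; real scanners ship far larger rule sets.
SUSPICIOUS_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded_password": re.compile(
        r"(?i)\b(?:password|passwd|secret)\s*=\s*['\"][^'\"]+['\"]"
    ),
}

ENTROPY_THRESHOLD = 4.0  # assumed cut-off, in bits per character
MIN_TOKEN_LENGTH = 20    # assumed minimum length before entropy is considered


def shannon_entropy(text):
    """Return the Shannon entropy of text in bits per character."""
    if not text:
        return 0.0
    counts = {}
    for ch in text:
        counts[ch] = counts.get(ch, 0) + 1
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def scan_text(text):
    """Return a list of (line_number, reason) findings for the given text."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        # Technique 1: match lines against known secret formats.
        for name, pattern in SUSPICIOUS_PATTERNS.items():
            if pattern.search(line):
                findings.append((number, name))
        # Technique 2: flag long tokens that look random.
        for token in re.findall(r"[A-Za-z0-9+/=_\-]{%d,}" % MIN_TOKEN_LENGTH, line):
            if shannon_entropy(token) >= ENTROPY_THRESHOLD:
                findings.append((number, "high_entropy_string"))
    return findings
```

A hook built on something like this would exit non-zero whenever `scan_text` returns any findings, which is what stops the push.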

CI/CD Pipeline

Now that I have some security testing for code and dependencies in the IDE, it's time to look at how to do all the testing during the pipeline build. As the pipeline brings together all of the development we want SAST and SCA to be run against the whole project. It's also the case that developers don't always use or take notice of the IDE plugins and Git hooks that they could and it's vital to uncover all of the potential vulnerabilities within the project for an overall understanding of risk.

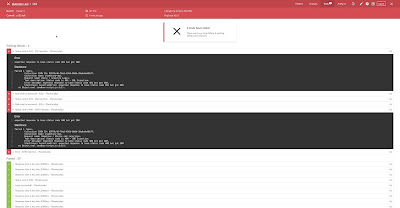

Here I'm using Jenkins for Continuous Integration (CI) with the Blue Ocean plugin. The pipeline configuration lives in a Jenkinsfile, which means it's under source control just like the code.

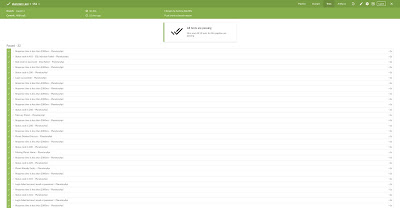

The Jenkinsfile is loaded into the Blue Ocean plugin from SCM and the various steps configured in the Jenkinsfile execute. Blue Ocean produces a graphical representation of the pipeline and shows each step and its associated output.

As can be seen, some steps can run in parallel if they do not depend on the outcome of a previous stage (the number of Jenkins build agents determines the maximum number). Here Run and SAST are running simultaneously. The build step checks out the source code from the repository, builds the Docker image, creates the database and seeds some initial data.

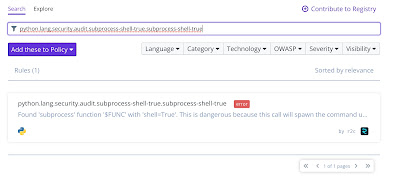

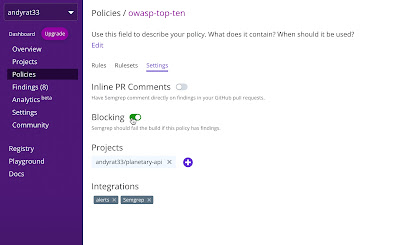

The Static Application Security Testing (SAST) stage uses Semgrep in the pipeline, with results shown in the Semgrep.io portal. Semgrep is also a commercial product with a very useable free tier. I have used more traditional SAST products that can be cumbersome to get started with and run. Semgrep is simple to get started with, and I really like that the rules are crowd-sourced and that you can write your own. This makes it a lot easier to build guard-rails that keep developers on a defined paved road, for example by requiring specific function usage.

Here is the stage from the Jenkinsfile:

As you can see Docker is used to run Semgrep as part of the build pipeline. The Semgrep Dashboard will contain the findings from the rules. In this case I'm just using the OWASP Top 10 ruleset. If Semgrep detects any problems the build will fail and stop.
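For anyone who wants to try the same idea outside Jenkins, the sketch below wraps a Dockerised Semgrep run in Python. It is not the stage from my Jenkinsfile: the image name `semgrep/semgrep`, the `/src` mount point and the helper names are assumptions, and it runs a local scan rather than reporting to the Semgrep portal. The `--error` flag is what makes Semgrep exit non-zero when it has findings, which is how a pipeline stage ends up failing.

```python
import subprocess
from pathlib import Path


def build_semgrep_command(source_dir, ruleset="p/owasp-top-ten"):
    """Build a docker command that scans source_dir with Semgrep."""
    source = Path(source_dir).resolve()
    return [
        "docker", "run", "--rm",
        "-v", f"{source}:/src",   # assumed mount point inside the container
        "semgrep/semgrep",        # assumed image name
        "semgrep", "scan",
        "--config", ruleset,
        "--error",                # exit non-zero when there are findings
    ]


def run_semgrep(source_dir):
    """Run the scan; return True only when Semgrep exits cleanly with no findings."""
    result = subprocess.run(build_semgrep_command(source_dir))
    return result.returncode == 0
```

Calling `run_semgrep(".")` from the project root needs Docker available locally; a `False` return is the equivalent of the failed build stage described above.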

Once a security issue is found in the code, the build pipeline ends. Before continuing, let's just have a look at the vulnerabilities I have found in the code. There is a description in the output from the Semgrep build stage, but the results are easier to read in the Semgrep Dashboard.

Each vulnerability identified can be selected for a detailed description.

[Figure: Findings Detail]

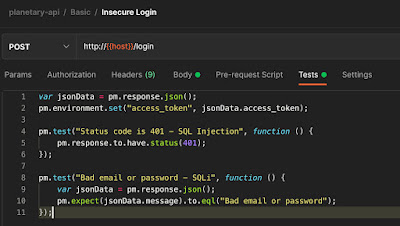

Security Unit Testing
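A negative test asserts that the API refuses something it should refuse, rather than checking that the happy path works. The sketch below shows the shape of such tests in plain Python. The tiny WSGI app is a hypothetical stand-in for a bearer-protected endpoint, not the code from app.py, and the token value and helper names are made up for the example.

```python
import json
from wsgiref.util import setup_testing_defaults

# Hypothetical token and endpoint, for illustration only.
VALID_TOKEN = "test-token"


def protected_app(environ, start_response):
    """Tiny WSGI app that only answers requests carrying the expected bearer token."""
    auth = environ.get("HTTP_AUTHORIZATION", "")
    if auth != f"Bearer {VALID_TOKEN}":
        start_response("401 Unauthorized", [("Content-Type", "application/json")])
        return [json.dumps({"message": "unauthorized"}).encode()]
    start_response("200 OK", [("Content-Type", "application/json")])
    return [json.dumps({"message": "ok"}).encode()]


def call(app, headers=None):
    """Invoke a WSGI app in-process and return (status_code, parsed_json_body)."""
    environ = {}
    setup_testing_defaults(environ)
    environ.update(headers or {})
    captured = {}

    def start_response(status, response_headers):
        captured["status"] = int(status.split()[0])

    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)


def test_rejects_missing_token():
    # Negative test: no Authorization header must not be let through.
    status, _ = call(protected_app)
    assert status == 401


def test_rejects_wrong_token():
    # Negative test: a syntactically valid but wrong token must be refused.
    status, _ = call(protected_app, {"HTTP_AUTHORIZATION": "Bearer wrong"})
    assert status == 401


def test_accepts_valid_token():
    # Positive control, so a blanket 401 does not make the negative tests pass.
    status, body = call(protected_app, {"HTTP_AUTHORIZATION": f"Bearer {VALID_TOKEN}"})
    assert status == 200 and body["message"] == "ok"
```

The same three cases translate directly into Postman requests with assertions on the response status.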

Dependency Tracking

I imported a manual container scan from Trivy into DefectDojo. Trivy examines all of the software contained in the Docker image that was created to run the API. There are a number of other vulnerabilities identified in the software used in the image. Ideally, this type of scan should also be included in the pipeline.
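If the scan were moved into the pipeline, one simple gate would be to read Trivy's JSON report and count findings by severity. The sketch below assumes the report layout I understand `trivy image --format json` to produce (a top-level `Results` list whose entries carry a `Vulnerabilities` list with a `Severity` field). The sample data is made up and is not from my scan.

```python
import json
from collections import Counter


def summarise_trivy_report(report):
    """Count vulnerabilities per severity in a parsed Trivy JSON report."""
    counts = Counter()
    for result in report.get("Results", []):
        # "Vulnerabilities" can be missing or null when a target has no findings.
        for vuln in result.get("Vulnerabilities") or []:
            counts[vuln.get("Severity", "UNKNOWN")] += 1
    return dict(counts)


# Made-up sample in the assumed shape of a Trivy report.
SAMPLE_REPORT = json.loads("""
{
  "Results": [
    {"Target": "example-image (debian)", "Vulnerabilities": [
      {"VulnerabilityID": "CVE-0000-0001", "PkgName": "libexample", "Severity": "HIGH"},
      {"VulnerabilityID": "CVE-0000-0002", "PkgName": "libexample", "Severity": "LOW"}
    ]},
    {"Target": "requirements.txt", "Vulnerabilities": null}
  ]
}
""")

summary = summarise_trivy_report(SAMPLE_REPORT)
print(summary)
```

A pipeline step could then fail the build when, say, `summary.get("CRITICAL", 0)` is above zero, while still importing the full report into DefectDojo for tracking.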

I mentioned earlier that Snyk had another capability: like Trivy, it can scan the Docker container image for vulnerable applications installed in the image. I ran this scan from the command line against the image.

I could have imported the resulting scan manually into DefectDojo as well; however, the results were similar to the Trivy scan, so it would have been duplication. A command line scan could be just as useful on a developer machine to get more direct feedback on the Docker image without having to wait for the next pipeline build.

The Snyk vulnerabilities identified from the Python requirements.txt have also been imported into DefectDojo and can be seen here alongside the ones imported from Dependency-Track during the pipeline build.

They are similar but not identical, with Snyk perhaps offering more advanced analysis and intelligence in terms of identification and impact.

This blog post is really only getting started in terms of what can be done to secure applications and CI/CD pipelines. There is so much more that needs to be done on top of what is a very simple example; consider SEC540 if you'd like to know more. It has provided me with many hours of tinkering to get this far!

One of the things that does seem to be gaining traction in the industry is the use of the Software Bill of Materials, or SBOM. I think the day is coming when software companies may be required to hand this over for inspection by prospective customers of their products.
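To make the SBOM idea concrete, here is a rough sketch that turns pinned requirements lines into a minimal CycloneDX-style JSON document, the format Dependency-Track consumes. It is a simplification under stated assumptions: only `name==version` pins are handled, the package URL is built by just lowercasing the name, the spec version is fixed at 1.4, and the package versions in the usage example are invented. In practice a dedicated SBOM generator is the better choice.

```python
import json


def requirements_to_sbom(requirements_text):
    """Build a minimal CycloneDX-style SBOM dict from pinned requirements lines."""
    components = []
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" not in line:
            continue  # this sketch only handles exact name==version pins
        name, version = (part.strip() for part in line.split("==", 1))
        components.append({
            "type": "library",
            "name": name,
            "version": version,
            "purl": f"pkg:pypi/{name.lower()}@{version}",  # simplified package URL
        })
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.4",
        "version": 1,
        "components": components,
    }


# Invented versions, for illustration only.
example = requirements_to_sbom("Flask==2.0.1\n# pinned for the demo\nrequests>=2.0\n")
print(json.dumps(example, indent=2))
```

The unpinned `requests>=2.0` line is skipped on purpose: an SBOM records what was actually shipped, so it needs exact versions.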
