From Bro to Log Parser Lizard to Security Visualisation

Recently I had to do some work with packet captures and system logs and decided to use Log Parser Lizard to examine the syslog files and the Bro logs I got from parsing the pcap's. Log Parser Lizard is a GUI for the brilliant MS Log Parser utility. I know a lot of us of complain that Windows doesn't have our favourite text processing utilities like grep/sed/awk etc but the addition of MS Log Parser more than makes up for the loss. Adding Log Parser Lizard provides a really cool way of analysing data for forensics and much more. For anyone new to MS Log Parser there is a great book entitled Microsoft Log Parser Toolkit available on Amazon. This is a great solution for ad-hoc data analysis when you don't have the data in ELSA or logstash, but more than that, it provides a minimal capability for exploratory data analysis without requiring the 'R' statistical language or Python with the SciPy stack. Even if ultimately you need to use either of those, these techniques could still be useful in curating and munging data before importing into either 'R' or Python SciPy.

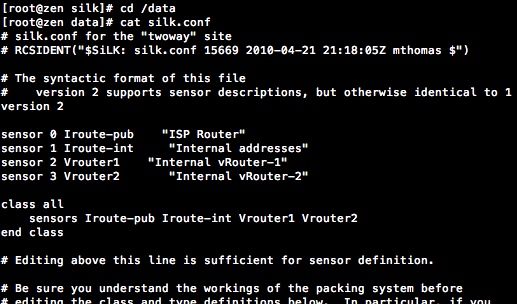

To start with I need to use Bro to work it's magic with the packet capture file and then set the timestamp in the file. Bro records the timestamp as an offset from the Unix epoch but that's not going to work for me when I use Log Parser to examine the data, so here it's set to UTC on my Security Onion VM.

Now I have the conn.log file from Bro with a timestamp, I just need to tidy up the header section of the file to leave just the column headings.

Now that I have the Bro connections log with a timestamp and a header row. It’s ready to use with log parser lizard. Next I need to fire-up Log Parser Lizard (note that you need to install MS Log Parser first before installing Log Parser Lizard), then I created a new query group for my queries and then created a new query. Once that's done I set the Input Log Format to TSV.

The input format properties are set to the default values and I don't need change anything here.

The first query is used to confirm that everything is working properly.

As you can see the the output is displayed as a series of columns with Bro field name as the column header. MS Log Parser uses SQL like syntax to extract and manipulate data in the file. There is a considerable amount of functionality and flexibility within MS Log Parser and I will only scratch the surface in this post. The 'TOP 100' part of the query just selects the first one hundred rows of the file without any aggregate functions. It's useful if you just want to get a view of the data to see what you have and check everything works. I usually save the first query I create for a given file and then use 'Save As' each time I want to create a new query as it saves setting the Input Format each time.

I started off by just looking at how many times an IP address appears in the data set and producing a graph using Log Parser Lizards built-in graphing capability.

I've used the COUNT() function to show how many times an IP address appears a source address (id.orig_h). The graphing capability in Log Parser Lizard is extensive, but if it does not do what's needed it's really easy to export data for use with other graphing tools. The next query uses the ROLLUP operator to create a summary for each source IP address and overall total.

In this next query I wanted to see all the failed, reset or rejected connections. There is a list of definitions for conn_state in the Bro documentation. In this query the I'm only looking for ports below 1024 with the desired connection states. The GROUP BY ROLLUP operator again provides additional rows for grand total and a summary total for each source IP.

There is one host in the list with a source address of 172.31.254.165 that seems have more failed connections to more destinations than any of the others. This was caused by a slow (T2) nmap scan originating from that host. The next queries output is going to be used to create a Treemap graph. The query looks at connections states where data was transferred and sets a lower limit for the amount of bytes transferred. The output is formatted specifically to make it easier to load into Treemap.

One of the neat features of Log Parser Lizard is that you can put the displayed table into 'Edit Mode' and in this case I've used that to add the name of the service for Port 3306 (MySQL) as Bro didn't output that. It's easy to export data from inside Log Parser Lizard, select the Export tab and then click the 'Save Results to File' button. For Treemap the file needs to be formatted as tab separated value (TSV). For Treemap to understand the file I will make a small adjustment to the header. Only the first two columns need the header row titles so the rest can be deleted. Treemap also needs to know what the data types are for the first two columns. To to do this I add a row under the headers and put STRING and INTEGER (uppercase is required) separated by a tab. The Port numbers are treated as a STRING, essentially they are a name not a numeric value.

At this point the file has a .tsv extension which needs to be renamed to either .txt or .tm3 for Treemap to open it. The end result shown here has had colouring turned on for the Port so each port shown has it's own unique colour.

One of the cool things about the Treemap program is that you can drill down, filter, set bin sizes for colouring, and set the variable used for scaling. I think Treemap works best when used as a interactive tool rather than just used for producing a static image. This next screenshot shows a drill down to a single source host and the connections it made. This time the sizing of the boxes uses the TotalBytes field to show comparative sizes of the traffic to each host.

It's also easy to export data to use in Afterglow. Afterglow uses three columns, Src IP, Event (Port), Dst IP and optionally a fourth column for node sizes. One problem can be that one very large value in TotalBytes will mean that everything else looks so small it's virtually unreadable. To solve this I've used the LOG10() function to create a base 10 logarithmic scale in the query. Afterglow needs the output in CSV format and the only change the file requires once exported is have the column headers line at the top removed.

The resulting graphic shows the ports scaled better for a comparative view. As you can see there is a connection from 172.31.254.165 to 172.31.253.102 on port 445 and then a connection from that system back to 172.31.254.165 on port 4444. In this case that was a reverse TCP meterpreter connection back to the attacking host.

While I was figuring out some of this stuff I found Applied Security Monitoring to be a really useful resource, they also have a really good site that seems to be adding evermore content. Highly recommended. I also have been working through Data-Driven Security: Analysis, Visualization and Dashboards and although I have no background in statistics it seems like a good introductory text.

To start with I need to use Bro to work it's magic with the packet capture file and then set the timestamp in the file. Bro records the timestamp as an offset from the Unix epoch but that's not going to work for me when I use Log Parser to examine the data, so here it's set to UTC on my Security Onion VM.

Now I have the conn.log file from Bro with a timestamp, I just need to tidy up the header section of the file to leave just the column headings.

Now that I have the Bro connections log with a timestamp and a header row. It’s ready to use with log parser lizard. Next I need to fire-up Log Parser Lizard (note that you need to install MS Log Parser first before installing Log Parser Lizard), then I created a new query group for my queries and then created a new query. Once that's done I set the Input Log Format to TSV.

The input format properties are set to the default values and I don't need change anything here.

The first query is used to confirm that everything is working properly.

SELECT TOP 100 * FROM 'C:\Users\andy\Downloads\blog\bro_conn.log'

As you can see the the output is displayed as a series of columns with Bro field name as the column header. MS Log Parser uses SQL like syntax to extract and manipulate data in the file. There is a considerable amount of functionality and flexibility within MS Log Parser and I will only scratch the surface in this post. The 'TOP 100' part of the query just selects the first one hundred rows of the file without any aggregate functions. It's useful if you just want to get a view of the data to see what you have and check everything works. I usually save the first query I create for a given file and then use 'Save As' each time I want to create a new query as it saves setting the Input Format each time.

I started off by just looking at how many times an IP address appears in the data set and producing a graph using Log Parser Lizards built-in graphing capability.

I've used the COUNT() function to show how many times an IP address appears a source address (id.orig_h). The graphing capability in Log Parser Lizard is extensive, but if it does not do what's needed it's really easy to export data for use with other graphing tools. The next query uses the ROLLUP operator to create a summary for each source IP address and overall total.

In this next query I wanted to see all the failed, reset or rejected connections. There is a list of definitions for conn_state in the Bro documentation. In this query the I'm only looking for ports below 1024 with the desired connection states. The GROUP BY ROLLUP operator again provides additional rows for grand total and a summary total for each source IP.

There is one host in the list with a source address of 172.31.254.165 that seems have more failed connections to more destinations than any of the others. This was caused by a slow (T2) nmap scan originating from that host. The next queries output is going to be used to create a Treemap graph. The query looks at connections states where data was transferred and sets a lower limit for the amount of bytes transferred. The output is formatted specifically to make it easier to load into Treemap.

One of the neat features of Log Parser Lizard is that you can put the displayed table into 'Edit Mode' and in this case I've used that to add the name of the service for Port 3306 (MySQL) as Bro didn't output that. It's easy to export data from inside Log Parser Lizard, select the Export tab and then click the 'Save Results to File' button. For Treemap the file needs to be formatted as tab separated value (TSV). For Treemap to understand the file I will make a small adjustment to the header. Only the first two columns need the header row titles so the rest can be deleted. Treemap also needs to know what the data types are for the first two columns. To to do this I add a row under the headers and put STRING and INTEGER (uppercase is required) separated by a tab. The Port numbers are treated as a STRING, essentially they are a name not a numeric value.

At this point the file has a .tsv extension which needs to be renamed to either .txt or .tm3 for Treemap to open it. The end result shown here has had colouring turned on for the Port so each port shown has it's own unique colour.

One of the cool things about the Treemap program is that you can drill down, filter, set bin sizes for colouring, and set the variable used for scaling. I think Treemap works best when used as a interactive tool rather than just used for producing a static image. This next screenshot shows a drill down to a single source host and the connections it made. This time the sizing of the boxes uses the TotalBytes field to show comparative sizes of the traffic to each host.

It's also easy to export data to use in Afterglow. Afterglow uses three columns, Src IP, Event (Port), Dst IP and optionally a fourth column for node sizes. One problem can be that one very large value in TotalBytes will mean that everything else looks so small it's virtually unreadable. To solve this I've used the LOG10() function to create a base 10 logarithmic scale in the query. Afterglow needs the output in CSV format and the only change the file requires once exported is have the column headers line at the top removed.

The resulting graphic shows the ports scaled better for a comparative view. As you can see there is a connection from 172.31.254.165 to 172.31.253.102 on port 445 and then a connection from that system back to 172.31.254.165 on port 4444. In this case that was a reverse TCP meterpreter connection back to the attacking host.

And Finally...a couple of great books

While I was figuring out some of this stuff I found Applied Security Monitoring to be a really useful resource, they also have a really good site that seems to be adding evermore content. Highly recommended. I also have been working through Data-Driven Security: Analysis, Visualization and Dashboards and although I have no background in statistics it seems like a good introductory text.

Comments

Post a Comment