Netflow analysis with SiLK - Part 2: Detection

SiLK provides numerous command line tools for querying the Netflow records in the data store. The primary query tool is rwfilter, which provides a way of partitioning the flows and selecting data according to your needs. SiLK can be used to get an understanding of normal traffic and interactions on the network. This is a useful exercise in itself, as it provides contextual information and situational awareness, not only for information security but also for other parts of the business. An understanding of the network, its architecture and its usage provides a foundation for troubleshooting and allows changes to be assessed in the light of solid information.

Why use Netflow data? Netflow and IPFIX data have one major advantage over full packet capture: because only a summary record of each flow is kept, not the packets themselves, they take very little space to store by comparison. Flow data can provide a record of network traffic going back months or even years, which is rarely practical with full packet capture. This could prove invaluable in forensics, where a compromise may have started months before it was discovered.

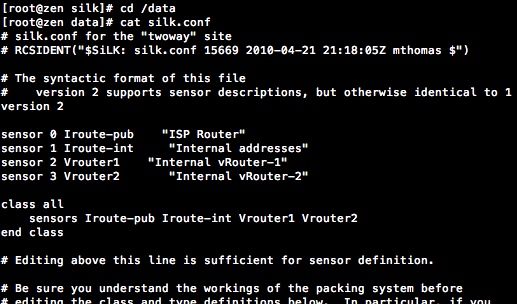

In this example I looked at how to determine which machines are running database management software. Of course, databases and other applications might be running on non-standard ports, so it might be useful to establish this up front using a packet capture and some traffic analysis. However, for the purpose of this exercise I’ll assume all DBMS are running on standard ports.

Using rwfilter, I selected the sensor of interest and the ‘out’ direction, looking for typical DBMS source ports over the dates in question.

As can be seen from the output, I found one MySQL server. The --pass=stdout option to rwfilter allows the binary records to be piped into another command from the SiLK suite, in this case rwstats. The rwstats command provides a statistical breakdown, here showing the percentage of flows from each of the source ports I specified. It can also be used to produce top-N talker lists for the network or to summarise the various data produced by rwfilter.
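
As a rough illustration of the pipeline just described, the Python sketch below assembles the rwfilter and rwstats command lines as argument lists and prints them as a shell pipeline. The sensor name `S0`, the dates and the port list are assumptions for illustration, not the values from the original query. The ports are the usual defaults for MS SQL Server, Oracle, MySQL and PostgreSQL. The options assume a SiLK 3.x installation.

```python
import shlex

# Assumed default DBMS ports; databases on non-standard ports will be missed.
DBMS_PORTS = {1433: "MS SQL Server", 1521: "Oracle", 3306: "MySQL", 5432: "PostgreSQL"}


def dbms_discovery_pipeline(sensor, start, end, ports=DBMS_PORTS):
    """Return (rwfilter argv, rwstats argv) for spotting DBMS servers by source port."""
    port_list = ",".join(str(p) for p in sorted(ports))
    rwfilter = [
        "rwfilter",
        f"--sensors={sensor}",
        "--type=out",            # outbound flows only
        f"--start-date={start}",
        f"--end-date={end}",
        "--proto=6",             # TCP
        f"--sport={port_list}",  # a server's replies carry its listening port as sport
        "--pass=stdout",         # write matching binary records to stdout
    ]
    # Top 10 source ports; with no --values switch rwstats counts flow records.
    rwstats = ["rwstats", "--fields=sport", "--count=10"]
    return rwfilter, rwstats


# Hypothetical sensor name and dates.
rwfilter, rwstats = dbms_discovery_pipeline("S0", "2012/11/13:15", "2012/11/14:15")
print(shlex.join(rwfilter) + " | " + shlex.join(rwstats))
```

Keeping each command as a list means it can later be handed straight to `subprocess` without any shell quoting.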

It should be noted that I intended to specify a range of 1 day by setting --start-date and --end-date. However, because the time precision is to the hour (15 = 15:00), I actually specified 1 day and 1 hour, since all the minutes in the end hour are included.
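
The arithmetic behind that extra hour can be checked with a few lines of Python. This sketch assumes hour-precision dates in SiLK's `YYYY/MM/DD:HH` form and models the behaviour described above, where the whole end hour is included. The function name and the dates are hypothetical.

```python
from datetime import datetime, timedelta

SILK_HOUR = "%Y/%m/%d:%H"  # hour-precision date as given to --start-date/--end-date


def effective_window(start_date, end_date):
    """Return (first, end_exclusive); the end hour is included in full."""
    first = datetime.strptime(start_date, SILK_HOUR)
    end_exclusive = datetime.strptime(end_date, SILK_HOUR) + timedelta(hours=1)
    return first, end_exclusive


first, end = effective_window("2012/11/13:15", "2012/11/14:15")
print(end - first)  # 1 day, 1:00:00

# Ending one hour earlier gives exactly 24 hours.
first, end = effective_window("2012/11/13:15", "2012/11/14:14")
print(end - first)  # 1 day, 0:00:00
```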

Now that I have identified the database server and its type (MySQL), it’s time to look at the flows in more detail.

Now that I know the server's IP address and port, I can set those as selection parameters in rwfilter and look at the percentage of traffic by client for just the 13th of November. Changing the rwstats fields to --fields=dip shows the percentage for each destination IP address, that is, for each client. It’s easy to reverse this and look at the percentage of bytes sent to the server by the clients.
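
Here is a sketch of that per-client breakdown, again building the commands in Python. The server address and port come from the text. The sensor name, the year and the `value` parameter are assumptions, and `--values=bytes` assumes the SiLK 3.x rwstats syntax. Giving the same date to both --start-date and --end-date at day precision should cover the whole of that day.

```python
import shlex


def client_share_pipeline(server, port, day, sensor="S0", value="records"):
    """Return (rwfilter argv, rwstats argv) giving each client's share of a server's replies."""
    rwfilter = [
        "rwfilter",
        f"--sensors={sensor}",   # hypothetical sensor name
        "--type=out",
        f"--start-date={day}",
        f"--end-date={day}",     # same day at day precision: the whole day
        f"--saddress={server}",  # flows sent by the server...
        f"--sport={port}",       # ...from its MySQL port
        "--pass=stdout",
    ]
    # --fields=dip groups the server's replies by destination (client) address.
    rwstats = ["rwstats", "--fields=dip", f"--values={value}", "--count=10"]
    return rwfilter, rwstats


rwfilter, rwstats = client_share_pipeline("172.31.253.102", 3306, "2012/11/13", value="bytes")
print(shlex.join(rwfilter) + " | " + shlex.join(rwstats))
```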

Now --type=in is specified to select the inbound flows. Similarly, --sport=3306 changes to --dport=3306, and the source address --saddress=172.31.253.102 changes to --daddress=172.31.253.102. Flows are unidirectional, so it’s convenient to look at them as two halves of a single conversation. If I wanted to see both halves at once, I could use --type=in,out, but I would then have to use --aport=3306 to match the port on either side of the flow and --any-address=172.31.253.102 to match the address on either side, selecting both parts of the conversation.
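
The three ways of selecting the conversation described above can be captured in one helper. This is a hypothetical function, sketched on the assumption that the server sits on the internal side of the sensor. It returns only the selection switches, which would be combined with the usual sensor, date and --pass options.

```python
def conversation_filter(server, port, direction):
    """Return rwfilter selection switches for one or both halves of a TCP conversation."""
    if direction == "out":    # server -> clients
        return ["--type=out", f"--saddress={server}", f"--sport={port}"]
    if direction == "in":     # clients -> server
        return ["--type=in", f"--daddress={server}", f"--dport={port}"]
    if direction == "both":   # either half: match the address/port on either side
        return ["--type=in,out", f"--any-address={server}", f"--aport={port}"]
    raise ValueError(f"direction must be 'in', 'out' or 'both', not {direction!r}")


for d in ("out", "in", "both"):
    print(d, conversation_filter("172.31.253.102", 3306, d))
```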

This time I piped the output to rwuniq to get a more detailed view of the interactions between client and server. The rwuniq command summarises the flows for each distinct key, such as each client/server connection. Adding --packets=4- and --ack-flag=1 to the rwfilter selection criteria ensures we view only flows that exchanged at least 4 packets, the minimum required to establish a TCP connection, and that had at least one ACK flag set.
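
Below is one possible shape for the rwuniq step, sketched in Python. The text doesn't say which half of the conversation was used here. The author's reply in the comments mentions outbound flows, so this sketch assumes outbound. The sensor name, the date and the key fields chosen for rwuniq are also assumptions.

```python
def connection_summary_pipeline(server, port, day, sensor="S0"):
    """Return (rwfilter argv, rwuniq argv) summarising each client connection."""
    rwfilter = [
        "rwfilter",
        f"--sensors={sensor}",   # hypothetical sensor name
        "--type=out",            # assumed: server -> client half
        f"--start-date={day}",
        f"--end-date={day}",
        f"--saddress={server}",
        f"--sport={port}",
        "--packets=4-",          # 4 or more packets (open-ended range)
        "--ack-flag=1",          # ACK set in the flow's cumulative TCP flags
        "--pass=stdout",
    ]
    # One output row per distinct client address and port, with flow, packet and byte totals.
    rwuniq = ["rwuniq", "--fields=dip,dport", "--values=records,packets,bytes"]
    return rwfilter, rwuniq


rwfilter, rwuniq = connection_summary_pipeline("172.31.253.102", 3306, "2012/11/13")
print(" ".join(rwfilter), "|", " ".join(rwuniq))
```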

Looking at connections over a period of time gives an indication of normal behaviour. I can use another rwfilter command piped to rwuniq to get an idea of how many bytes the server sends for each connection made by a client throughout the day.

In the above example, I changed the column separator and omitted the column titles so that the output could easily be saved as a CSV file for graphing. I also sorted the output into time order. SiLK has a separate sorting tool called rwsort, and in some circumstances it may be more efficient to pre-sort the input to rwuniq with it.
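
One way to reproduce that CSV-friendly output is sketched below. It uses an rwuniq command that keys on the flow start time, disables column padding and titles, uses a comma separator and sorts the bins, plus a small parser for the result. The field list is an assumption, since the original command isn't shown. The sketch also assumes that sorting on the key fields puts the rows in time order because `stime` is listed first.

```python
import csv
import io

RWUNIQ_CSV = [
    "rwuniq",
    "--fields=stime,dip,dport",  # start time first, so sorted output is in time order
    "--values=bytes",
    "--sort-output",             # sort rows by the key fields
    "--no-titles",               # omit the header row
    "--no-columns",              # no padding to fixed-width columns
    "--column-separator=,",
    "--no-final-delimiter",      # no trailing comma at the end of each row
]


def parse_rwuniq_csv(text):
    """Parse 'stime,dip,dport,bytes' rows into dicts, skipping blank lines."""
    rows = []
    for row in csv.reader(io.StringIO(text)):
        if not row:  # blank line
            continue
        stime, dip, dport, nbytes = row
        rows.append({"stime": stime, "dip": dip, "dport": int(dport), "bytes": int(nbytes)})
    return rows
```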

Looking at the output, this would appear to be a fairly normal day's access to the database, but it’s probably worth taking regular snapshots of the stats and graphing activity over time. Before doing that, I want to examine how you can automate queries. In the previous example I put the commands into a shell script, but you could also use Python to interrogate flow data. This Python script produces exactly the same output. Turning the query into a script also makes it possible to automate the collection process with a scheduled job, pass parameters for the date and time, and control the output format more precisely.
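
The original script isn't reproduced here, so the following is only a minimal sketch of the idea, not the author's code. It runs a `producer | consumer` pipeline, such as rwfilter into rwuniq, without a shell and returns the consumer's text output. A second helper formats a date for rwfilter so that a scheduled job can query, for example, the previous day. The helper names are hypothetical.

```python
import subprocess
from datetime import date, timedelta


def silk_day(d):
    """Format a date for rwfilter's --start-date/--end-date (day precision)."""
    return d.strftime("%Y/%m/%d")


def yesterday(today=None):
    """Return the previous day's date string, e.g. for a nightly cron job."""
    today = today or date.today()
    return silk_day(today - timedelta(days=1))


def run_pipeline(producer, consumer):
    """Run `producer | consumer` without a shell and return the consumer's stdout."""
    p1 = subprocess.Popen(producer, stdout=subprocess.PIPE)
    p2 = subprocess.Popen(consumer, stdin=p1.stdout, stdout=subprocess.PIPE, text=True)
    p1.stdout.close()  # so the producer gets SIGPIPE if the consumer exits early
    out, _ = p2.communicate()
    p1.wait()
    for proc, cmd in ((p1, producer), (p2, consumer)):
        if proc.returncode != 0:
            raise subprocess.CalledProcessError(proc.returncode, cmd)
    return out


# Example use with SiLK installed (hypothetical sensor name):
# day = yesterday()
# print(run_pipeline(
#     ["rwfilter", "--sensors=S0", "--type=out", f"--start-date={day}",
#      f"--end-date={day}", "--sport=3306", "--pass=stdout"],
#     ["rwuniq", "--fields=dip", "--values=bytes"]))
```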

A sample of the output is shown below.

Once the output is imported into a spreadsheet, I can create a timeline graph of bytes transferred to the clients, and hopefully any irregularities will show up.
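
If the per-connection rows are already in Python, the spreadsheet step can be approximated as follows. The sketch totals bytes per client per hour and writes one column per client, which charts directly as a timeline. It assumes SiLK's default timestamp format (`YYYY/MM/DDTHH:MM:SS.sss`) for the start time. The function names are hypothetical.

```python
import csv
import io
from collections import defaultdict


def hourly_timeline(rows):
    """Total bytes per (hour, client) from (stime, client_ip, bytes) tuples."""
    table = defaultdict(lambda: defaultdict(int))
    for stime, client, nbytes in rows:
        hour = stime[:13]  # 'YYYY/MM/DDTHH' prefix of the assumed timestamp format
        table[hour][client] += int(nbytes)
    return table


def timeline_csv(table):
    """One row per hour, one column per client; a client with no traffic that hour gets 0."""
    hours = sorted(table)
    clients = sorted({c for per_hour in table.values() for c in per_hour})
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["hour", *clients])
    for h in hours:
        writer.writerow([h, *(table[h].get(c, 0) for c in clients)])
    return out.getvalue()
```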

Looking at the graph, it seems I have found some activity that does not fit the norm: a huge spike from a previously unknown client at 172.31.254.28 is observed towards the end of the monitored period. Although it’s not conclusive evidence of wrongdoing, it’s certainly an indicator worthy of further investigation.
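
A graph works well for spotting this by eye, but the same two signals can also be checked automatically: a client not seen before, and an unusually large transfer. This is a deliberately simple sketch, not a method from the post. It flags connections from clients absent from a baseline period, and connections whose byte count exceeds the baseline mean plus `k` population standard deviations. The threshold rule, the default `k=3.0` and the function name are assumptions.

```python
from statistics import mean, pstdev


def flag_anomalies(baseline, current, k=3.0):
    """Flag (client_ip, bytes) connections in `current` that come from a client
    never seen in `baseline`, or whose bytes exceed mean + k * pstdev of the
    baseline byte counts. `baseline` must be non-empty."""
    known_clients = {ip for ip, _ in baseline}
    sizes = [nbytes for _, nbytes in baseline]
    threshold = mean(sizes) + k * pstdev(sizes)
    flagged = []
    for ip, nbytes in current:
        reasons = []
        if ip not in known_clients:
            reasons.append("new client")
        if nbytes > threshold:
            reasons.append("byte spike")
        if reasons:
            flagged.append((ip, nbytes, reasons))
    return flagged
```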

One problem area I regularly work on is monitoring privileged users who have access to databases containing sensitive information, such as PCI or PII data. Using Netflow data to examine their activity is one method I have used to get an indication of unusual behaviour.

Thank you for this excellent post. One nit-pick:

Andy Ratcliffe wrote:

> Adding --packets=4- and --ack-flag=1 to the selection criteria of rwfilter ensures we view flows that exchanged at least 4 packs, the minimum required to establish a TCP connection

Since, as you mentioned, flows are unidirectional, the minimum number of packets in a TCP handshake is 1. This is only academic. Your point still stands, and limiting flows to those with more than 3 packets makes good sense.

Thanks Again,

George

George,

Thanks for pointing that out. You are right, of course, as I was only looking at outbound flows.

Kind regards,

Andy

Read your post with great interest and decided to give it a go, despite my poor knowledge of Linux. First of all, I'm using an Ubuntu server for the install and am struggling to even get the prerequisites in place. Any chance you could offer a few hints or give me an updated package list for apt-get ... even if I really should know how to do it myself!? ;)

Hi,

I'm happy to help if I can. You could use CentOS and follow the instructions in part 1 of the post. I'm a bit busy at the moment at SANS London 2012, but I'll have a look to see if I can find out the apt-get commands for Ubuntu.

Kind regards,

Andy

Hey Andy,

I hope you have had a great holiday season and feel ready to kick off the new year :)

I was just curious whether you have had a chance to look into the apt-get commands for a SiLK installation on Ubuntu, as per our previous conversation.

I understand you have other (and far better) things to do :) I promise not to bother you about this again, but wanted to send this off to check with you.

All the best,

Fredrik

Hi Fredrik,

I think these should work:

apt-get install build-essential

apt-get install python-dev

apt-get install libglib2.0-dev

apt-get install gnutls

apt-get install libgnutls-dev

apt-get install liblzo2-dev

apt-get install libpcap-dev

Happy new year.

Andy

Thanks Andy, halfway through the install now! All the suggested packages installed apart from gnutls, which generated an error (could not be found). Trying anyway to see if I hit a brick wall further down the road ;)

/f