Protective Monitoring - An Introduction

Protective Monitoring (PM) is, simply, the use of log, alert and audit data (let’s call it event data) to determine whether a security event has occurred, so that it can be identified before serious harm results. However, collecting terabytes of event data is not the end objective; protective monitoring seeks to make sense of that data and provide meaningful insight into the security of the network. Making sense of all that event data normally takes some special sauce! In protective monitoring terms this is usually some kind of intelligent Security Information and Event Management (SIEM) software.

Protective Monitoring requires in-depth knowledge of a wide range of topics. If you are interested in:

- Compliance with PCI DSS, SOX, HIPAA and many others

- Analysing a variety of log and audit data

- Understanding the value & cost of event data

- Making better decisions on what to log and how much to keep and for how long

- Combining event intelligence across a variety of systems and networks to identify real threats

- Governance & Policy for Protective Monitoring

- Continuous Improvement and tuning of log and audit data

- Forensic Readiness

- Understanding how to gather event data from a variety of sources

- Protecting event data from modification

- Discovering different ways of visualising event data

- Security Metrics

Then you are interested in, and probably already doing, Protective Monitoring.

Security Information and Event Management technology is often sold as a ‘turn it on and you’re compliant’ type of product. That simply isn’t true.

There is very little written on exactly what it means to own and manage SIEM technology and get the most out of it. The Protective Monitoring discipline, with its skills, knowledge and methods, provides the key to using SIEM software effectively.

The following sections provide a brief overview and set the scene for establishing a protective monitoring process.

- PM Policy

- Use Cases

- An Example

- Planning

- Go Deep

PM Policy

Preparing a Protective Monitoring policy requires examining any laws or regulations that govern your organisation. It should also consider privacy laws that govern the monitoring of individuals. Protective Monitoring is not generally concerned with monitoring individuals; rather, amongst many other things, it can monitor groups of users, looking for suspicious or anomalous behaviour. However, once a capability exists it can be used to monitor an individual, so make sure the policy clearly states whether this is acceptable and, most importantly, that it is within the law.

All existing policies need to be examined to ensure they support PM. If you have an Identity and Access Management policy, it's helpful if it defines how privileged users are identified, authenticated and authorised for administrative duties such as managing a database. If you can't identify the user because all DBAs log in as "sys" or "sa", then you simply won't know who the event data pertains to. This is a common failure and it undermines effective PM controls.

Forensic Readiness

Forensic readiness may be part of the Protective Monitoring policy or a separate document. The important aspect of this section is that it identifies how you comply with the rules of evidence. It should not identify what data will be required as court evidence; it defines how digital evidence must be handled and the fundamental properties of digital evidence, such as accurate time stamping, appropriate protection and cryptographic hashing of log data. For the UK, see the Association of Chief Police Officers (ACPO) Good Practice Guide for Computer-Based Evidence.

The Use Cases (see Use Cases) will identify which data would be appropriate to collect as evidence. In all but the most extreme cases, event data should be targeted by deep analysis.

How your SIEM platform stores events may be critical in meeting Forensic Readiness. Often storage of the whole 'unparsed' event may be required to demonstrate the evidence has not been tampered with in any way.
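As a rough illustration of the cryptographic hashing mentioned above, the sketch below chains a SHA-256 digest over each raw, unparsed event so that later modification of any stored line can be detected. The function names and the idea of a seed value are assumptions made for the example, not features of any particular SIEM product, and a real deployment would also need trusted time stamps and protected storage for the digests.

```python
import hashlib


def chain_digests(raw_events, seed=b""):
    """Return one SHA-256 hex digest per raw event, each covering the previous digest."""
    digests = []
    previous = hashlib.sha256(seed).digest()
    for line in raw_events:
        # Hash the previous digest together with the unparsed event bytes,
        # so changing one event invalidates every digest that follows it.
        current = hashlib.sha256(previous + line.encode("utf-8"))
        digests.append(current.hexdigest())
        previous = current.digest()
    return digests


def first_tampered_index(raw_events, recorded_digests, seed=b""):
    """Return the index of the first event that no longer matches, or None."""
    recomputed = chain_digests(raw_events, seed)
    for index, (expected, actual) in enumerate(zip(recorded_digests, recomputed)):
        if expected != actual:
            return index
    if len(recomputed) != len(recorded_digests):
        # Events were appended or truncated after the digests were recorded.
        return min(len(recomputed), len(recorded_digests))
    return None
```

The digests would be recorded at collection time and kept apart from the events themselves; verifying later is then a matter of recomputing the chain over the stored raw events.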

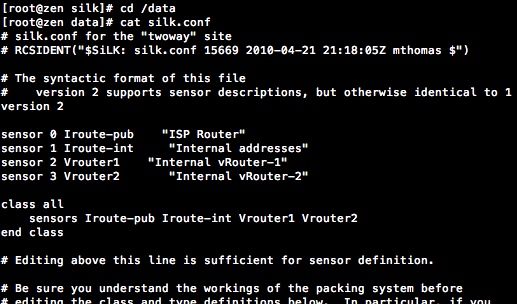

Use Cases

The Use Cases are how you write down the purpose of a specific piece of Protective Monitoring. A Use Case that says ‘Detect suspicious behaviour’ would be a little too broad for implementation. A Use Case that says ‘Detect suspicious behaviour in the web servers by identifying any unauthorised protocol initiated from them’ is much easier to implement than the vague ‘Detect suspicious behaviour’. Creating content in your SIEM platform to meet this Use Case is reasonably simple; see the example below.

Each Use Case must detail not only what you intend to collect and how it is useful, but also the process for reviewing that information. For example, if you intend to detect unauthorised configuration changes to an application or database, then you should also document who will review the report, such as the change control board, and how escalation and subsequent investigations will proceed, all the way up to invoking incident response. A failure to write good Use Cases can leave you with lots of event data, untapped potential intelligence and a wasted investment.

An Example

Imagine a standard 3-tier Web App Hosting platform:

Firewall -> Web Farm -> Firewall -> App Servers -> Firewall -> Database Servers

Management Network -> Firewall -> Web Farm Management Interface

Web Servers Backup Interface -> Firewall -> Backup Net

The web server uses TCP 80 and 443 over interface eth0 to serve web requests. It has a management interface, eth1, which uses SSH, and a firewall protects it from the Internet, from the inner Application DMZ, and from the management and backup networks. Each interface has its own IP address on a different subnet.

The implementation is easiest if you can model some of the zones in your SIEM software. The ‘Management Protocol’ group contains TCP:22 for SSH, the ‘Backup Protocol’ group contains TCP:4487 and the ‘Web Protocol’ group contains TCP:80 and TCP:443. As these protocols are used on physically separate NICs, each with its own IP address on a specific subnet, you can expect to see traffic destined ‘to’ or ‘from’ these addresses. With a little work, you can create assets and groups that model your environment and then create rules or reports to highlight any unusual behaviour. Event logs from the firewall then provide valuable information by notifying you of any deviation from expected protocol use.

So which log messages do you need to look for? In this case both firewall ‘accept’ and ‘deny’ messages are relevant; we don’t actually care whether the firewall blocked the ‘irc’ protocol, or whatever, from connecting to systems on the Internet (although it’s probably a lot better if it did), we just need to know it was attempted. A plain English version of the rule would probably read something like:

If the Web Farm Servers attempt communication on any protocol except the Web Protocols on their eth0 interfaces, raise an alert. Similarly, if the protocols used with the designated management devices differ from the expected Management Protocols, raise an alert.

It’s as plain English as I can make it, anyway. You could create two rules, one for connections the firewall denied and one for connections it permitted, with the latter carrying a higher priority alert. There are many, many possible avenues for applying PM, and the SIEM technology which so many of us have deployed gives rise to almost endless possibilities for mining security data from our systems.
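To make the rule a little more concrete, here is a minimal sketch of how it might be evaluated against parsed firewall events. The port numbers come from the description above; the IP addresses, the `FirewallEvent` fields and the priority labels are all invented for illustration, and a real SIEM would express this in its own rule language rather than in Python.

```python
from dataclasses import dataclass

# Expected protocols per web server interface address.
# Ports are from the example; the addresses are hypothetical.
EXPECTED = {
    "10.0.0.10": {("tcp", 80), ("tcp", 443)},  # eth0, Web Protocol group
    "10.0.1.10": {("tcp", 22)},                # eth1, Management Protocol group
    "10.0.2.10": {("tcp", 4487)},              # backup interface, Backup Protocol group
}


@dataclass
class FirewallEvent:
    src_ip: str
    dst_ip: str
    protocol: str
    dst_port: int
    action: str  # "accept" or "deny"


def evaluate(event):
    """Return None for expected traffic, otherwise an alert priority."""
    for address in (event.src_ip, event.dst_ip):
        expected = EXPECTED.get(address)
        if expected is None:
            continue  # this end of the connection is not a modelled interface
        # Simplification: only the destination port is compared.
        if (event.protocol, event.dst_port) not in expected:
            # A permitted connection is more serious than a blocked attempt.
            return "high" if event.action == "accept" else "medium"
    return None
```

For example, an event from 10.0.0.10 to an Internet host on TCP 6667 yields "medium" if the firewall denied it and "high" if it was accepted, while inbound TCP 443 to 10.0.0.10 raises nothing.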

Planning

Establishing a process for Protective Monitoring that includes deep, detailed analysis of which event data is valuable is key to a successful Protective Monitoring programme. An understanding of the cost/benefit trade-off is important to get real value from event data. The catch-all, 'collect everything' stance doesn't work and should be avoided. It costs too much, and actually reduces value by making it almost impossible to find useful information in the terabytes of event data.

Most SIEM products offer some ability to filter out event data that you don't want, and this is useful in improving the signal-to-noise ratio. If you have very stringent requirements to collect terabytes of event data, consider a tiered solution. A good approach is to consider a cost per event and a relative value per event. You can then direct events to different collection/storage systems according to cost and value. In this way the lower value, high quantity events can be stored on a lower cost, high-density SIEM platform and high value events directed to your tier-1 SIEM platform. Often aggregation of events can save storage costs, and events might only become 'interesting' when some threshold is reached.

Considering costs in protective monitoring is not as straightforward as just considering the storage costs involved. The cost of acquiring events should often be considered alongside storage and can be a significant factor. For example, if you intend to enable the following Oracle audit parameters to meet PCI DSS compliance:

```sql
-- enable audit
-- (EXTENDED is only valid with DB or XML, so XML is used here to keep the trail in OS files)
ALTER SYSTEM SET audit_trail=xml,extended SCOPE=SPFILE;
ALTER SYSTEM SET audit_sys_operations=true SCOPE=SPFILE;

-- Stop & restart the database

-- audit logon/logoff success and failure
AUDIT CREATE SESSION BY ACCESS;

-- audit changes to auditing, privilege grants and system settings
AUDIT AUDIT SYSTEM;
AUDIT SYSTEM AUDIT;
AUDIT SYSTEM GRANT;
AUDIT AUDIT ANY;
AUDIT NETWORK BY SESSION;
AUDIT ALTER SYSTEM;

-- audit use of the ANY TABLE and ANY PROCEDURE privileges
AUDIT SELECT ANY TABLE BY ACCESS;
AUDIT INSERT ANY TABLE BY ACCESS;
AUDIT DELETE ANY TABLE BY ACCESS;
AUDIT ALTER ANY TABLE BY ACCESS;
AUDIT EXECUTE ANY PROCEDURE BY ACCESS;
```

You might find that the Oracle database server is not scaled to handle the additional workload and needs more processing power or memory. Cost? Pain, Marty? Try aspirin.

Remember your mileage may vary in the real world and these are only examples.

Network bandwidth can also be a cost consideration on occasion, especially when all event data is sent to a central SIEM platform from remote sites over slow links.
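The cost and value routing described earlier in this section, along with threshold-based aggregation, can be sketched in a few lines. This is only an illustration under stated assumptions: the value scores, cost figures, thresholds and tier names are invented for the example, and in practice they would come out of your own analysis of each event type.

```python
from collections import Counter


def route_event(value_score, cost_per_event, value_threshold=0.7, cost_ceiling=0.05):
    """Pick a destination from a relative value (0 to 1) and a cost per event."""
    if value_score >= value_threshold:
        return "tier1-siem"          # high value events go to the primary platform
    if cost_per_event <= cost_ceiling:
        return "high-density-store"  # low value but cheap enough to keep in bulk
    return "filter-out"              # low value and expensive: do not collect


def keys_over_threshold(event_keys, threshold):
    """Aggregate events by key and return the keys whose count reaches the threshold."""
    counts = Counter(event_keys)
    return sorted(key for key, count in counts.items() if count >= threshold)
```

Aggregating first and forwarding only the keys that cross a threshold is one way to keep high quantity events from ever reaching the expensive tier.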

As I've said, Protective Monitoring is a process, not a tick-in-the-box exercise. It starts by establishing a scope, examining the potential event data available, and reasoning about the value and cost of that data in meeting your 'Use Cases', but it should go much further. It should even figure in your purchasing decisions. In my experience event data rarely features in the purchasing process, but if you intend to have a comprehensive PM programme it should. Each application or device, whether a security control or not, should be evaluated in terms of its ability to log meaningful event data. It's also worth considering whether your SIEM platform supports collecting that data out of the box.

Similarly, if you are developing applications (or having them developed for you), the audit and event data should be part of the requirements phase. A failure to consider what you need at a very early stage in development could lead to insufficient or incorrect information being logged, and to expensive changes. Application development can be a tricky area. Bespoke applications often comprise many different components, so the point at which event data is produced might need to be considered carefully, or a system might produce additional event data because of how each service calls the audit mechanism. This can happen in a loosely coupled SOA architecture, where many components might be re-used and called from different user screens, giving rise to confusion. Use screen IDs to understand where the user was in the application when the audit/event data was produced.
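As a small sketch of the screen ID idea, a shared component could accept the calling screen's ID and include it in every audit record it writes. The field names and the `build_audit_event` helper are hypothetical; the point is only that the screen ID travels with the event.

```python
import json
from datetime import datetime, timezone


def build_audit_event(user_id, screen_id, component, action, when=None):
    """Serialise one audit record that carries the originating screen ID."""
    when = when or datetime.now(timezone.utc)
    record = {
        "timestamp": when.isoformat(),
        "user_id": user_id,
        "screen_id": screen_id,  # where the user was in the application
        "component": component,  # the re-used service that produced the event
        "action": action,
    }
    return json.dumps(record, sort_keys=True)
```

Two screens calling the same component then produce events that differ in `screen_id`, which makes them distinguishable when they arrive in the SIEM.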

Go Deep

I hope this brief introduction is useful; if you have any comments, please let me know.

In future posts, I will start to look at the event data available from various applications, operating systems, appliances and security controls and work through the detailed use cases. I will also examine further the process for protective monitoring and how a simple spreadsheet tool can be used to capture requirements and work out use cases.
